The easiest way to make your LLM output reliable

Discover the best prompts for your goals with the autonomous prompt engineer.

Reduce error rate in LLM outputs effortlessly

Get reliable outputs

Unlock production-ready LLM performance without cumbersome model fine-tuning.

Test 1000+ prompts

Automatically generate, evaluate, and refine prompts at scale, aligned with your goals.

Observe winners

Use our LLM algorithm to discover prompt patterns that boost your output quality.

SET PARAMS

START TUNING

SEE RESULTS

1. Choose your goal. Pick from a range of predefined objectives to set your LLM's direction.

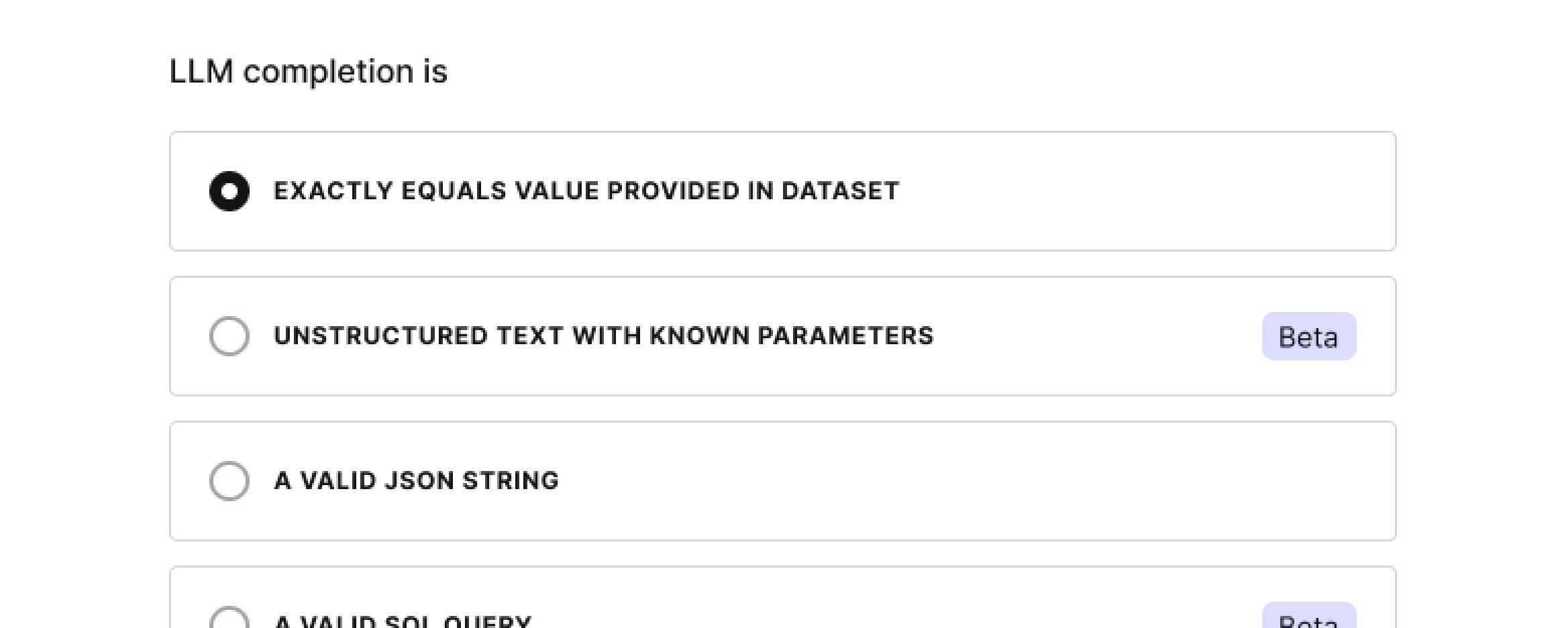

2. Set parameters. Upload your data, adjust the LLM settings, and draft your initial prompt.

4. See results. Watch your prompt improve and get the one that leads your LLM to the optimal output.

How it works

CHOOSE GOAL

3. Start tuning. Define tuning preferences and launch the auto-optimization.

3. Start tuning. Define tuning preferences and launch the auto-optimization. No integration required.

Tune prompts for any type of LLM output

STRUCTURED TEXT

Get outputs in the formats you require, such as JSON or text that matches a regex pattern, to power your LLM applications.

CODE GENERATION

From SQL to Python: generate bug-free code that follows your guardrails.

UNSTRUCTURED TEXT

Guide your LLM to improve the quality of question answering, summarization, and other tasks.

CLASSIFICATION

Improve the accuracy of LLM-powered binary and multi-class classification tasks.

Supported models